Workflow: Analysis of Acquired Resistance to ICI in NSCLC

In this Jupyter notebook, we outline the methodology used in our recent research (Ricciuti et al.) published in the Journal of Clinical Oncology (JCO). We present the step-by-step process used to analyze the Whole Slide Images (WSIs) of Non-Small Cell Lung Cancer (NSCLC) samples. Our focus is on identifying Tumor Infiltrating Lymphocytes (TILs) and analyzing their spatial distribution to understand the mechanisms of acquired resistance to Immune Checkpoint Inhibitors (ICI).

Utilizing the PathML toolkit, the study processed whole-slide images by segmenting them into tiles for analysis with a machine learning algorithm, which was tasked with identifying and categorizing various cell types present in the tumor microenvironment, such as lymphocytes, epithelial cells, macrophages, and neutrophils.

After categorizing these cells, the study constructed K-nearest neighbor (KNN) and minimum spanning tree graphs based on the spatial proximity of the identified cell types. The features extracted from these graphs were subjected to statistical evaluations, such as the Wilcoxon signed-rank test, to quantify differences in cellular distributions and densities.

The analysis revealed a notable reduction in the density of tumor-infiltrating lymphocytes (TILs) following Immune Checkpoint Inhibitors (ICI) therapy (median from 88 to 36 cells/mm^2, P = 0.02). Conversely, no significant change was observed in the TIL density between pre- and post-treatment samples from chemotherapy or targeted therapy patients (P = 0.62 and P = 0.51, respectively). Additionally, there was no significant variation in the density of tumor-infiltrating macrophages or neutrophils across treatments (immunotherapy, chemotherapy, or targeted therapy). These observations highlighted a significant level of genomic and immunophenotypic variation within NSCLC cases that developed acquired resistance (AR) to ICIs.

Reference:

Ricciuti, B., Lamberti, G., Puchala, S.R., Mahadevan, N.R., Lin, J.R., Alessi, J.V., Chowdhury, A., Li, Y.Y., Wang, X., Spurr, L. and Pecci, F., 2024. Genomic and immunophenotypic landscape of acquired resistance to PD-(L)1 blockade in non–small-cell lung cancer. Journal of Clinical Oncology, pp.JCO-23.

Notebook Outline:

Initialization: Setting up the environment by importing necessary libraries and defining essential functions.

Model initialization and Data Loading: Loading the model and WSI data for analysis.

Model Inference and Detection of Cell Types: Demonstrating the use of the Inference API to deploy a pre-trained HoVerNet model for the detection of lymphocytes in histopathological images.

Graph Construction and Feature Extraction: Building spatial graphs based on the detected lymphocytes to analyze their arrangement, then extracting features from the constructed graphs. These features can later be used to train baseline machine learning models that distinguish between pre- and post-ICI treatment samples.

Conclusion and Further Steps (Potential for Advanced Analysis): While this notebook focuses on basic graph construction methods, we acknowledge the extensive capabilities of the Graph API, which can be leveraged to build more sophisticated graph models, thus driving forward the research in this domain.

Initialization

Import Libraries

Here we import the necessary libraries that will be used throughout this notebook. These libraries provide us with the tools required for image processing, graph analysis, and machine learning.

[1]:

# Standard library and third-party imports

import os

import traceback

from glob import glob

import warnings

import math

import numpy as np

import torch

from torch.nn import functional as F

import cv2

from skimage.measure import regionprops, label

import networkx as nx

import h5py

from tqdm import tqdm

from dask.distributed import Client, LocalCluster

import matplotlib.pyplot as plt

from PIL import Image, ImageDraw

os.environ["JAVA_HOME"] = "/opt/conda/envs/pathml/"

# PathML related imports

from pathml.core import HESlide, SlideData, Tile

from pathml.preprocessing.transforms import Transform

from pathml.preprocessing import Pipeline

import pathml.core.tile

from pathml.utils import pad_or_crop

from pathml.ml import HoVerNet, loss_hovernet, post_process_batch_hovernet

from pathml.ml.hovernet import (

_post_process_single_hovernet,

extract_nuclei_info,

group_centroids_by_type,

)

from pathml.inference import Inference, InferenceBase

from pathml.ml.utils import center_crop_im_batch

from pathml.graph.preprocessing import (

KNNGraphBuilder,

MSTGraphBuilder,

GraphFeatureExtractor,

BaseGraphBuilder,

)

/var/tmp/ipykernel_30119/432525869.py:29: DeprecationWarning: Importing from 'pathml.ml.hovernet' is deprecated and will be removed in a future version. Please use 'pathml.ml.models.hovernet' instead.

from pathml.ml.hovernet import _post_process_single_hovernet, extract_nuclei_info, group_centroids_by_type

[2]:

# Class to handle remote ONNX models
class HoVerNetInference(Inference):
    """Transformation to run inference on an ONNX model.

    Citation for model:
    Pocock J, Graham S, Vu QD, Jahanifar M, Deshpande S, Hadjigeorghiou G, Shephard A, Bashir RM, Bilal M, Lu W, Epstein D.
    TIAToolbox as an end-to-end library for advanced tissue image analytics. Communications medicine. 2022 Sep 24;2(1):120.

    Args:
        model_path (str): temp file name to download onnx from huggingface
        input_name (str): name of the input the ONNX model accepts
    """

    def __init__(
        self,
        model_path="temp.onnx",
        input_name="data",
        num_classes=5,
        model_type="Segmentation",
        local=True,
        mask_name="cell",
    ):
        super().__init__(model_path, input_name, num_classes, model_type, local)
        self.model_card["num_classes"] = self.num_classes
        self.model_card["model_type"] = self.model_type
        self.model_card["name"] = "Tiabox HoverNet Test"
        self.model_card["model_input_notes"] = "Accepts tiles of 256 x 256"
        self.model_card["citation"] = (
            "Pocock J, Graham S, Vu QD, Jahanifar M, Deshpande S, Hadjigeorghiou G, Shephard A, Bashir RM, Bilal M, Lu W, Epstein D. TIAToolbox as an end-to-end library for advanced tissue image analytics. Communications medicine. 2022 Sep 24;2(1):120."
        )
        self.mask_name = mask_name

    def __repr__(self):
        return "Class to handle remote TIAToolBox HoverNet test ONNX. See model card for citation."

    def F(self, image):
        # Run the ONNX inference function on a single image
        prediction_map = self.inference(image)
        return prediction_map

    def apply(self, tile):
        assert isinstance(tile, pathml.core.tile.Tile), "Input must be a Tile object"
        assert tile.slide_type.stain == "HE", "Tile must be H&E stained"

        # Run ONNX inference
        model_output = self.F(tile.image)

        # Reorder outputs from NC, NP, HV (ONNX) to NP, HV, NC (PathML post-processing)
        self.modeloutput_trf = [
            torch.tensor(model_output[1]),
            torch.tensor(model_output[2]),
            torch.tensor(model_output[0]),
        ]

        # Post-process model output
        nucleus_mask, prediction_map, nc_out = post_process_batch_hovernet(
            self.modeloutput_trf, n_classes=5, return_nc_out_preds=True
        )

        # Crop the input tile to the 164 x 164 region covered by the model output
        tile.image = pad_or_crop(tile.image, (164, 164))

        # Transpose pred_map and nc_out to bring the 164, 164 axes to the front
        if isinstance(prediction_map, np.ndarray):
            prediction_map = np.transpose(
                prediction_map, (2, 3, 0, 1)
            )  # New shape: (164, 164, 1, 5)
        else:  # Assuming it's a PyTorch tensor
            prediction_map = prediction_map.permute(
                2, 3, 0, 1
            )  # New shape: (164, 164, 1, 5)

        if isinstance(nc_out, np.ndarray):
            nc_out = np.transpose(nc_out, (1, 2, 0))  # New shape: (164, 164, 1)
        else:  # Assuming it's a PyTorch tensor
            nc_out = nc_out.permute(1, 2, 0)  # New shape: (164, 164, 1)

        # Update the masks in the tile
        tile.masks[self.mask_name] = nucleus_mask[0]
        tile.masks["pred_map"] = prediction_map
        tile.masks["nc_out"] = nc_out

    def remove(self):
        # Remove the temporary ONNX model file
        os.remove(self.model_path)

Model initialization and Data Loading

Initialize Inference

We initialize the HoVerNet model, which has been pre-trained on the MoNuSAC dataset. The model is in ONNX format and will be loaded using the Inference API to perform inference on the WSI slides.

Model Overview

The model utilized in this study is the HoVer-Net, a deep learning architecture specifically designed for simultaneous segmentation and classification of nuclei in histology images. The implementation is sourced from the TIAToolbox, as cited in the model card. This model is proficient in distinguishing various cell types within histopathological slides, making it an ideal choice for our research focusing on TILs.

[3]:

hvinf = HoVerNetInference(model_path="../hovernet_monusac.onnx")

[4]:

hvinf.model_card

[4]:

{'name': 'Tiabox HoverNet Test',

'num_classes': 5,

'model_type': 'Segmentation',

'notes': None,

'model_input_notes': 'Accepts tiles of 256 x 256',

'model_output_notes': None,

'citation': 'Pocock J, Graham S, Vu QD, Jahanifar M, Deshpande S, Hadjigeorghiou G, Shephard A, Bashir RM, Bilal M, Lu W, Epstein D. TIAToolbox as an end-to-end library for advanced tissue image analytics. Communications medicine. 2022 Sep 24;2(1):120.'}

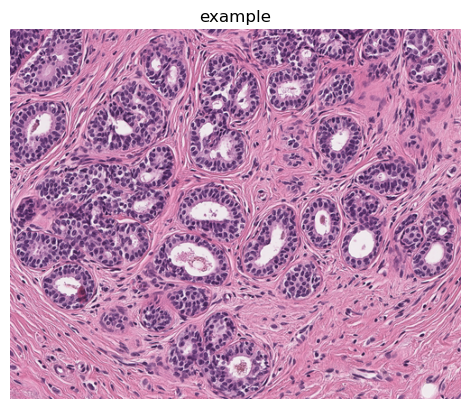

Load and Display Example Image

[5]:

wsi = SlideData(

"../../data/data/example_0_N_0.png", name="example", backend="openslide", stain="HE"

)

[6]:

wsi.plot(), wsi.shape

[6]:

(None, (1949, 2377))

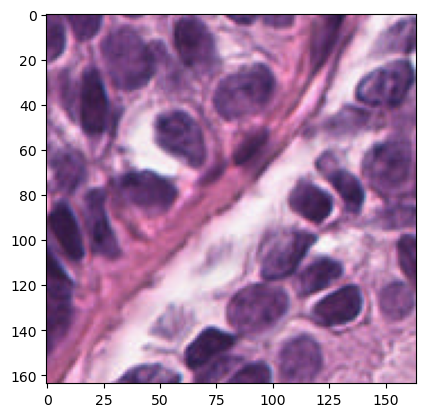

[9]:

region = wsi.slide.extract_region(location=(0, 0), size=(256, 256))

plt.imshow(region)

plt.title("Input image", fontsize=11)

plt.gca().set_xticks([])

plt.gca().set_yticks([])

plt.show()

SlideData and Tile Initialization

With the image loaded, we encapsulate it within a Tile object, which will be used to apply inference with the pre-trained model.

[10]:

img_tile = Tile(region, coords=(0, 0), stain="HE")

Apply Inference to Tile

We apply the HoVerNet model to the Tile object to perform cell type identification.

[11]:

hvinf.apply(img_tile)

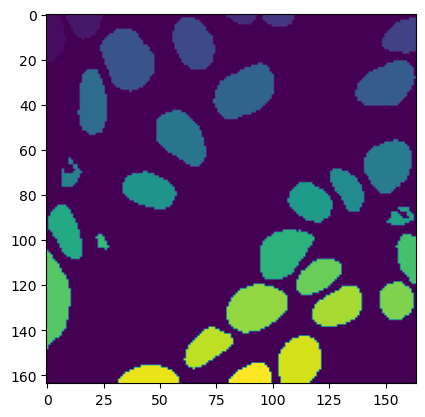

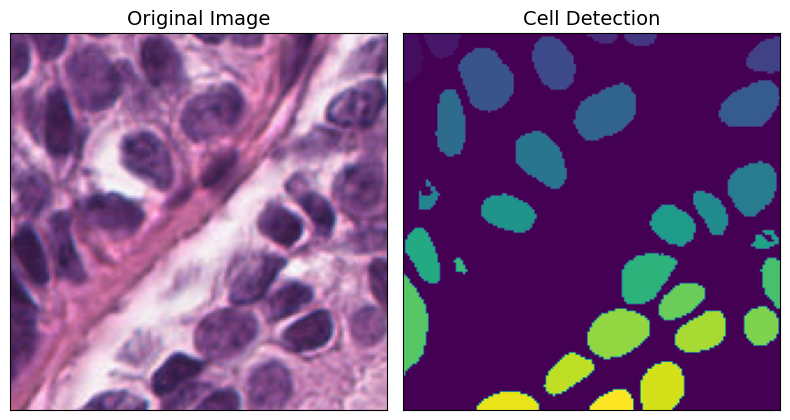

Plotting the Segmented Cells

Here we visualize the segmentation results by overlaying the detected cell masks on the image.

[12]:

# Plotting image

plt.imshow(img_tile.image)

[12]:

<matplotlib.image.AxesImage at 0x7fd4f428f3a0>

[13]:

# Plot the cell segmentation mask

plt.imshow(img_tile.masks["cell"])

[13]:

<matplotlib.image.AxesImage at 0x7fd4f420b0d0>

[14]:

# plot the original and cell segmented image

fontsize = 14

fig, axarr = plt.subplots(nrows=1, ncols=2, figsize=(8, 8))

axarr[0].imshow(img_tile.image)

axarr[0].set_title("Original Image", fontsize=fontsize)

axarr[1].imshow(img_tile.masks["cell"])

axarr[1].set_title("Cell Detection", fontsize=fontsize)

for ax in axarr.ravel():
    ax.set_yticks([])
    ax.set_xticks([])

plt.tight_layout()

plt.show()

[15]:

# extract the cell segmented mask from our tile

nuclei_map = img_tile.masks["cell"]

# uniquely label each cell in the mask and record the centroids for each cell

label_instance_map = label(nuclei_map)

regions = regionprops(label_instance_map)

instance_centroids = np.empty((len(regions), 2))
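The cell above allocates instance_centroids but does not populate it. A minimal sketch of one way to fill it from regionprops follows. The toy mask stands in for img_tile.masks["cell"], and storing centroids in (x, y) order is an assumption made for illustration.

```python
import numpy as np
from skimage.measure import label, regionprops

# Toy binary mask standing in for img_tile.masks["cell"]
# (assumption: nonzero pixels belong to a nucleus).
nuclei_map = np.zeros((10, 10), dtype=np.uint8)
nuclei_map[1:4, 1:4] = 1  # first nucleus, a 3 x 3 block
nuclei_map[6:9, 5:8] = 1  # second nucleus, a 3 x 3 block

# Give each connected component its own integer label.
label_instance_map = label(nuclei_map)
regions = regionprops(label_instance_map)

# One row per nucleus, filled with its centroid.
instance_centroids = np.empty((len(regions), 2))
for i, region in enumerate(regions):
    center_row, center_col = region.centroid  # regionprops returns (row, col)
    instance_centroids[i] = (center_col, center_row)  # stored here as (x, y)

print(instance_centroids)
```

Because regionprops reports centroids as (row, column), swapping them once at this point keeps later plotting code in the usual (x, y) convention.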

Model Inference and Detection of Cell Types

This section details how we run the inference pipeline over the whole slide image and how to handle the outputs.

HoVer-Net Inference

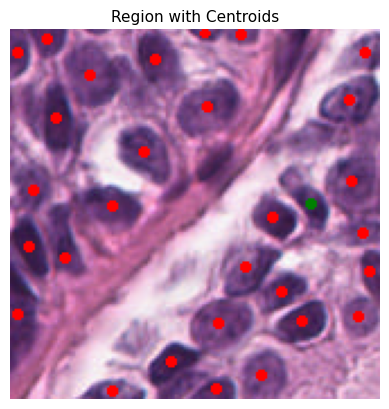

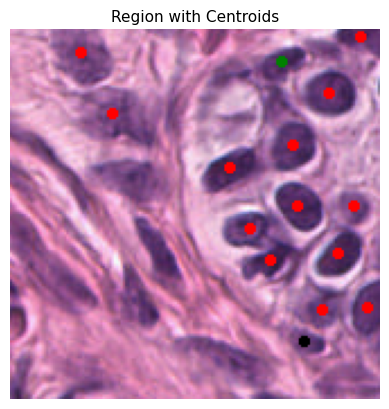

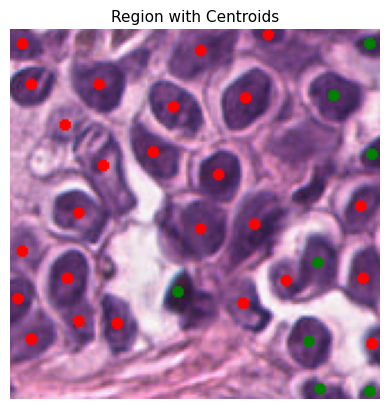

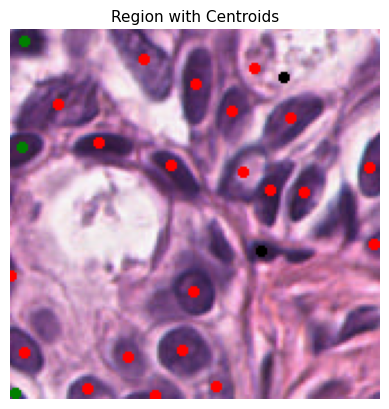

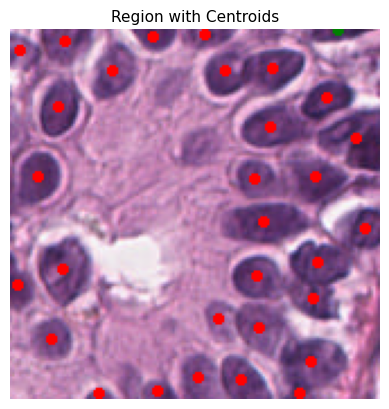

The inference pipeline is executed using a pre-trained HoVer-Net model, which predicts the presence and type of cells on a given tile from the WSI. Below you can see the code that plots the actual tile image and the overlaid centroid locations.

Each cell is represented by a specific color code for easy identification:

0: Background - Black

1: Epithelial - Red

2: Lymphocyte - Green

3: Macrophage - Blue

4: Neutrophil - Yellow
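The legend above can be applied to a per-pixel class map with a lookup table. This is only a sketch: colorize_class_map and the exact RGB triples are illustrative choices and are not part of PathML.

```python
import numpy as np

# Class index -> RGB colour, following the legend above.
CLASS_COLORS = {
    0: (0, 0, 0),      # Background - black
    1: (255, 0, 0),    # Epithelial - red
    2: (0, 255, 0),    # Lymphocyte - green
    3: (0, 0, 255),    # Macrophage - blue
    4: (255, 255, 0),  # Neutrophil - yellow
}


def colorize_class_map(class_map):
    """Turn an (H, W) array of class indices into an (H, W, 3) RGB image."""
    lut = np.zeros((len(CLASS_COLORS), 3), dtype=np.uint8)
    for class_id, rgb in CLASS_COLORS.items():
        lut[class_id] = rgb
    # Cast to integers first, since class maps may be stored as floats.
    return lut[np.asarray(class_map, dtype=np.int64)]


toy_map = np.array([[0.0, 1.0], [2.0, 4.0]])
rgb = colorize_class_map(toy_map)
print(rgb.shape)
```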

Run Inference Pipeline on Whole Slide Images

Using a pipeline, we can systematically process the tiles of the WSI and perform inference on each.

[16]:

wsi = SlideData(

"../../data/data/example_0_N_0.png", name="example", backend="openslide", stain="HE"

)

[17]:

# Start a local Dask cluster; a cloud or HPC cluster can be used instead via dask.distributed.

cluster = LocalCluster(n_workers=10, threads_per_worker=1, processes=True)

client = Client(cluster)

[18]:

# Execute pipeline by tiling and applying the inference on tiles.

pipeline = Pipeline([HoVerNetInference(model_path="../hovernet_monusac.onnx")])

# Run the Inference Pipeline

wsi.run(

pipeline,

tile_size=256,

tile_stride=164,

tile_pad=True,

distributed=True,

client=client,

)
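The stride of 164 matches the 164 x 164 central region kept from each 256 x 256 input tile (see pad_or_crop in HoVerNetInference.apply), so neighbouring outputs abut rather than overlap. The sketch below lists tile origins under the assumption that padded tiling places one tile at every multiple of the stride. tile_origins is a hypothetical helper, and PathML's own tile generator may count edge tiles differently.

```python
def tile_origins(length, stride):
    """Top-left offsets along one axis, assuming the last tile may be padded."""
    return list(range(0, length, stride))


height, width = 1949, 2377  # wsi.shape of the example image
tile_size, stride = 256, 164

rows = tile_origins(height, stride)
cols = tile_origins(width, stride)
overlap = tile_size - stride  # pixels shared by neighbouring input tiles

print(len(rows), len(cols), len(rows) * len(cols), overlap)
```

The 92-pixel overlap between input tiles is twice the 46-pixel margin that is cropped from each side of a tile after inference.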

[19]:

wsi.shape

[19]:

(1949, 2377)

Saving the Results

The results of the inference can be saved for further analysis.

[20]:

# Save it to a file.

wsi.write('./example_pred_out_padded.h5')

Graph Construction and Feature Extraction

Graph-based analysis is a powerful approach to study the spatial patterns of cells. Here, we construct two types of graphs: K-Nearest Neighbors (KNN) and KNN combined with Minimum Spanning Tree (KNN+MST).

Group and Rescale Centroids

Centroids are grouped by cell type and rescaled according to the original image dimensions.

[21]:

def rescale_centroids(centroids, original_size, cropped_size, patch_position=(0, 0)):
    """
    Rescale centroids from a cropped patch to their original position in the larger image.

    Args:
        centroids (list of tuples): List of centroids in the cropped image (x, y format).
        original_size (tuple): The size of the original image (width, height).
        cropped_size (tuple): The size of the cropped image (width, height).
        patch_position (tuple): The top-left position of the patch in the original image,
            in (row, column) order, i.e. (y, x), as used by the indexing below.

    Returns:
        List of tuples: Rescaled centroids in the original image (x, y format).
    """
    # Margin removed from each side by the centre crop
    offset_x = (original_size[0] - cropped_size[0]) // 2
    offset_y = (original_size[1] - cropped_size[1]) // 2

    rescaled_centroids = []
    for centroid in centroids:
        # Adjust for the cropping and then for the position in the original image
        rescaled_x = centroid[0] + offset_x + patch_position[1]
        rescaled_y = centroid[1] + offset_y + patch_position[0]
        rescaled_centroids.append((rescaled_x, rescaled_y))

    return rescaled_centroids
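As a worked example of the arithmetic in rescale_centroids, the sketch below shifts a single centroid. shift_centroid is a hypothetical stand-alone helper that mirrors the function above. It assumes the tile coordinates are given as (row, column), which is how the code above indexes patch_position.

```python
def shift_centroid(centroid_xy, tile_coords_rc, tile_size=256, crop_size=164):
    """Map an (x, y) centroid in a centre-cropped tile to whole-image coordinates."""
    margin = (tile_size - crop_size) // 2  # 46 pixels for 256 -> 164
    x, y = centroid_xy
    row, col = tile_coords_rc
    return (x + margin + col, y + margin + row)


# Centroid at (x=10, y=20) in the tile whose top-left corner is row 164, column 328.
print(shift_centroid((10, 20), (164, 328)))  # (384, 230)
```

Here x becomes 10 + 46 + 328 = 384 and y becomes 20 + 46 + 164 = 230.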

[22]:

def plot_centroids_on_region(
    extracted_region, rescaled_dict, dot_size=2, color_map=None
):
    """
    Plot colored dots on the extracted region of an image at specified centroid locations.

    Args:
        extracted_region (numpy.ndarray): The extracted region of the image.
        rescaled_dict (dict): Dictionary of (x, y) centroids grouped by cell type,
            expressed in the coordinates of extracted_region.
        dot_size (int): Size of the dot for each centroid. Default is 2.
        color_map (dict): Mapping of cell types to colors.
    """
    if not isinstance(extracted_region, np.ndarray):
        raise ValueError("extracted_region must be a numpy array.")
    if not isinstance(rescaled_dict, dict):
        raise ValueError("rescaled_dict must be a dictionary.")

    if color_map is None:
        # Default color map
        color_map = {0: "black", 1: "red", 2: "green", 3: "blue", 4: "yellow"}

    # Convert numpy array to PIL Image for drawing
    region_image = Image.fromarray(np.uint8(extracted_region))
    draw = ImageDraw.Draw(region_image)

    # Draw a colored dot for each centroid based on its cell type
    for cell_type, centroids in rescaled_dict.items():
        for centroid in centroids:
            x, y = centroid[0], centroid[1]
            # Include centroid only if it falls within the extracted region
            if (
                0 <= x < extracted_region.shape[1]
                and 0 <= y < extracted_region.shape[0]
            ):
                draw.ellipse(
                    [(x - dot_size, y - dot_size), (x + dot_size, y + dot_size)],
                    fill=color_map[cell_type],
                )

    # Convert back to numpy array for plotting
    region_with_centroids = np.array(region_image)

    plt.imshow(region_with_centroids)
    plt.title("Region with Centroids", fontsize=11)
    plt.axis("off")
    plt.show()

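To ensure the reliability of our data, we only consider cells with a detection probability above a certain threshold. This reduces false positives and refines the dataset used to construct the spatial graphs. Here, a threshold of 0.5 (prob_threshold_accumulate) is applied to the centroids that feed the graphs, while a looser threshold of 0.1 (prob_threshold_plot) is used only for the diagnostic plots.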
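The thresholding idea can be sketched in a few lines. The record layout below (a list of dicts with "centroid", "type", and "prob" keys) and the helper group_by_type are hypothetical. They only illustrate the filtering and do not reproduce the internals of PathML's extract_nuclei_info or group_centroids_by_type, including whether the comparison there is strict.

```python
from collections import defaultdict


def group_by_type(nuclei, prob_threshold):
    """Group centroids by cell type, keeping detections at or above the threshold."""
    grouped = defaultdict(list)
    for nucleus in nuclei:
        if nucleus["prob"] >= prob_threshold:
            grouped[nucleus["type"]].append(nucleus["centroid"])
    return dict(grouped)


# Made-up detections for illustration only.
nuclei = [
    {"centroid": (12.0, 30.5), "type": 2, "prob": 0.92},
    {"centroid": (80.2, 41.0), "type": 2, "prob": 0.35},
    {"centroid": (55.0, 10.0), "type": 1, "prob": 0.61},
]

for_plot = group_by_type(nuclei, prob_threshold=0.1)   # permissive, for inspection
for_graph = group_by_type(nuclei, prob_threshold=0.5)  # stricter, for graph building

print(len(for_plot[2]), len(for_graph[2]))
```

The low-confidence lymphocyte appears in the plotting group but is excluded from the group used for graph construction.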

[23]:

# Initialize the dictionary to accumulate all rescaled centroids
all_rescaled_centroids = {0: [], 1: [], 2: [], 3: [], 4: []}  # Assuming 5 cell types
temp_key = {}

for idx, tile_key in enumerate(wsi.tiles.keys):
    try:
        curr_tile = wsi.tiles[tile_key]
        extraction_location = curr_tile.coords

        # if extraction_location != (1148, 492):
        #     continue

        # Get cell information and group centroids
        centers = extract_nuclei_info(
            curr_tile.masks["cell"], curr_tile.masks["nc_out"]
        )

        # Different probability thresholds for different purposes
        prob_threshold_plot = 0.1
        prob_threshold_accumulate = 0.5

        # Group centroids for plotting
        grouped_centroids_plot = group_centroids_by_type(centers, prob_threshold_plot)

        # Group centroids for accumulation
        grouped_centroids_accumulate = group_centroids_by_type(
            centers, prob_threshold_accumulate
        )

        temp_key[extraction_location] = {}

        # Rescale centroids for each cell type and accumulate
        for cell_type, centroids in grouped_centroids_accumulate.items():
            formatted_centroids = [(c[0], c[1]) for c in centroids]
            rescaled_ctr = rescale_centroids(
                formatted_centroids,
                original_size=(256, 256),
                cropped_size=(164, 164),
                patch_position=extraction_location,
            )
            all_rescaled_centroids[cell_type].extend(rescaled_ctr)
            temp_key[extraction_location][cell_type] = rescaled_ctr

        # Plotting logic: show only the first six tiles
        if idx <= 5:
            rescaled_dct_for_plot = {}
            for cell_type, centroids in grouped_centroids_plot.items():
                formatted_centroids = [(c[0], c[1]) for c in centroids]
                # rescaled_ctr = rescale_centroids(formatted_centroids, original_size=(256,256), cropped_size=(164,164), patch_position=extraction_location)
                # rescaled_dct_for_plot[cell_type] = rescaled_ctr
                rescaled_dct_for_plot[cell_type] = formatted_centroids

            print(f"Tile ID: {tile_key}")
            plot_centroids_on_region(curr_tile.image, rescaled_dct_for_plot)
            print(np.unique(curr_tile.masks["nc_out"]))

        # break
    except Exception as e:
        print(f"An error occurred processing tile {tile_key}: {e}")

Tile ID: (0, 0)

[0. 1. 2.]

Tile ID: (0, 1148)

[0. 1. 2.]

Tile ID: (0, 1312)

[0. 1. 2.]

Tile ID: (0, 1476)

[0. 1. 2.]

Tile ID: (0, 164)

[0. 1. 2.]

Tile ID: (0, 1640)

[0. 1. 2.]

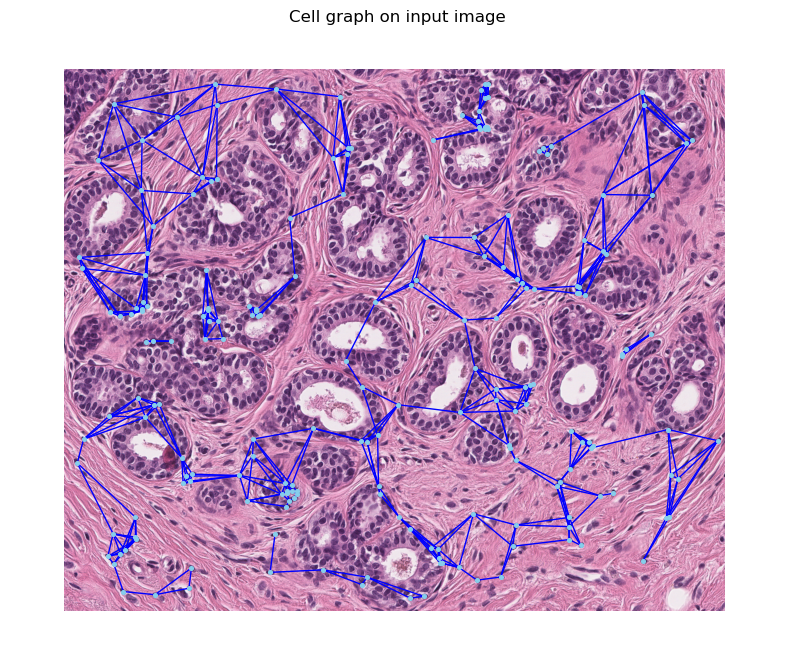

Graph Construction

Using the processed centroids, KNN and KNN+MST graphs are generated. The KNN graph links each cell to its nearest neighbors, forming a network that reflects the local cellular architecture. The KNN+MST graph, on the other hand, is a reduced form that retains the most significant connections based on the minimum spanning tree algorithm, emphasizing the most prominent pathways among cells.

To facilitate the construction of these graphs, we use the KNNGraphBuilder and MSTGraphBuilder classes from the PathML Graph API. Their process_with_centroids method lets us generate both KNN and KNN+MST graphs based solely on the centroid locations of the cells.
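To make the two graph types concrete, here is a minimal sketch that builds a k-NN graph from 2D points with NumPy and networkx and then reduces it with a minimum spanning tree. It illustrates the general technique on random points. It is not the implementation behind KNNGraphBuilder or MSTGraphBuilder, which may combine the two steps differently, and knn_graph is a hypothetical helper.

```python
import networkx as nx
import numpy as np


def knn_graph(points, k):
    """Undirected k-NN graph with Euclidean edge weights (assumes no duplicate points)."""
    points = np.asarray(points, dtype=float)
    diffs = points[:, None, :] - points[None, :, :]
    dist = np.sqrt((diffs ** 2).sum(axis=-1))  # pairwise distance matrix

    graph = nx.Graph()
    graph.add_nodes_from(range(len(points)))
    for i in range(len(points)):
        # argsort lists the point itself first (distance 0), so skip index 0.
        neighbours = np.argsort(dist[i])[1 : k + 1]
        for j in neighbours:
            graph.add_edge(i, int(j), weight=float(dist[i, j]))
    return graph


rng = np.random.default_rng(0)
points = rng.uniform(0, 100, size=(30, 2))  # random stand-ins for cell centroids

knn = knn_graph(points, k=5)
# Spanning tree (or forest, if the k-NN graph is disconnected) of shortest edges.
mst = nx.minimum_spanning_tree(knn, weight="weight")

print(knn.number_of_edges(), mst.number_of_edges())
```

Because the k-NN relation is not symmetric, a node in the undirected graph can end up with more than k neighbours, which is consistent with a minimum degree of k and a larger maximum degree.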

[27]:

lymphocyte_centroids = all_rescaled_centroids[2]

len(lymphocyte_centroids)

[27]:

202
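A count like the one above can be converted into a density in cells/mm^2, the unit used for the TIL densities quoted in the introduction. The sketch below is illustrative only. It assumes a hypothetical resolution of 0.5 microns per pixel and treats the whole image as tissue, neither of which is stated in this notebook, and density_per_mm2 is a hypothetical helper.

```python
def density_per_mm2(n_cells, height_px, width_px, microns_per_pixel):
    """Cells per square millimetre for a rectangular region of known resolution."""
    height_mm = height_px * microns_per_pixel / 1000.0
    width_mm = width_px * microns_per_pixel / 1000.0
    return n_cells / (height_mm * width_mm)


# 202 lymphocytes in the 1949 x 2377 example image;
# microns_per_pixel=0.5 is an assumed value, not taken from the slide metadata.
density = density_per_mm2(202, 1949, 2377, microns_per_pixel=0.5)
print(round(density, 1))
```

In practice the denominator would be the measured tissue or tumor area rather than the full image rectangle.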

[28]:

knn_graphbuilder = KNNGraphBuilder(k=5, return_networkx=False)

knnmst_graphbuilder = MSTGraphBuilder(k=5, return_networkx=False)

[29]:

knn_graph = knn_graphbuilder.process_with_centroids(np.array(lymphocyte_centroids))

knnmst_graph = knnmst_graphbuilder.process_with_centroids(

np.array(lymphocyte_centroids)

)

[30]:

len(lymphocyte_centroids)

[30]:

202

[32]:

import matplotlib.pyplot as plt
import networkx as nx
from torch_geometric.utils.convert import to_networkx


def plot_graph_on_image(graph, image):
    # Create a figure and an axis for plotting
    fig, ax = plt.subplots(figsize=(10, 10))

    # Convert the PyTorch geometric graph to a NetworkX graph
    G = to_networkx(graph, to_undirected=True)
    pos = graph.node_centroids

    # Plot the image on the axis
    ax.imshow(image, cmap="cubehelix")

    # Draw the graph on the same axis
    nx.draw(
        G,
        pos,
        ax=ax,
        node_size=7,
        with_labels=False,
        font_size=8,
        font_color="white",
        node_color="skyblue",
        edge_color="blue",
    )

    # Set the background color of the axis and remove ticks
    ax.set_facecolor("black")
    ax.set_xticks([])
    ax.set_yticks([])

    # Set the title of the plot
    ax.set_title("Cell graph on input image")

    # Display the plot
    plt.show()

[33]:

image_path = "../../data/data/example_0_N_0.png"

# Load the image with PIL

image = Image.open(image_path)

# Convert the PIL image to a NumPy array

image_array = np.array(image)

[34]:

plot_graph_on_image(knn_graph, image_array)

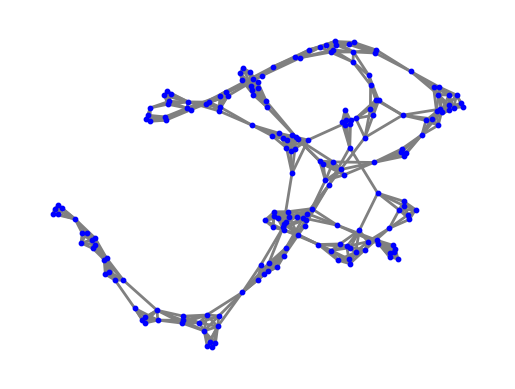

Extracting Graph Features

Once these graphs are constructed, we can extract features from them using the GraphFeatureExtractor. The extracted features provide insights into the structural organization of TILs in the tissue. They include basic graph properties as well as degree, centrality, constraint, and coreness measures, which may be indicative of the robustness of the immune response.

[35]:

knn_graphbuilder = KNNGraphBuilder(k=5, return_networkx=True)

knnmst_graphbuilder = MSTGraphBuilder(k=5, return_networkx=True)

[36]:

knn_graph = knn_graphbuilder.process_with_centroids(np.array(lymphocyte_centroids))

knnmst_graph = knnmst_graphbuilder.process_with_centroids(

np.array(lymphocyte_centroids)

)

[37]:

graph_feats = GraphFeatureExtractor(use_weight=False)

graph_feats.process(knn_graph.to_undirected())

[37]:

{'diameter': 23,

'radius': 12,

'assortativity_degree': 0.12596787074745247,

'density': 0.03182109255701689,

'transitivity_undir': 0.6129032258064516,

'hubs_mean': 0.0049504950495049506,

'hubs_median': 1.972988056537529e-05,

'hubs_max': 0.08790528425448371,

'hubs_min': 1.5566236464610336e-09,

'hubs_sum': 1.0,

'hubs_std': 0.01557903212606235,

'authorities_mean': 0.004950495049504951,

'authorities_median': 1.9729880565326968e-05,

'authorities_max': 0.08790528425448432,

'authorities_min': 1.556623615030426e-09,

'authorities_sum': 1.0000000000000002,

'authorities_std': 0.015579032126062464,

'constraint_mean': 0.3821528228713704,

'constraint_median': 0.36468192355551443,

'constraint_max': 0.5980641975308644,

'constraint_min': 0.20813865909875606,

'constraint_sum': 77.19487022001682,

'constraint_std': 0.0902774596695133,

'coreness_mean': 5.0,

'coreness_median': 5.0,

'coreness_max': 5,

'coreness_min': 5,

'coreness_sum': 1010,

'coreness_std': 0.0,

'egvec_centr_mean': 0.021388076539739002,

'egvec_centr_median': 0.00014641277844975486,

'egvec_centr_max': 0.3783534589344582,

'egvec_centr_min': 8.423939118380628e-09,

'egvec_centr_sum': 4.3203914610272784,

'egvec_centr_std': 0.06703018149636189,

'degree_mean': 6.396039603960396,

'degree_median': 6.0,

'degree_max': 11,

'degree_min': 5,

'degree_sum': 1292,

'degree_std': 1.5226293563593762,

'personalized_pgrank_mean': 0.00495049504950495,

'personalized_pgrank_median': 0.00466076905726555,

'personalized_pgrank_max': 0.007880915260106189,

'personalized_pgrank_min': 0.0037251677437948934,

'personalized_pgrank_sum': 0.9999999999999998,

'personalized_pgrank_std': 0.0009142006637043169}
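Several of the features listed above have direct networkx counterparts. The sketch below computes a few of them on a small toy graph and then checks two of the reported values against their definitions. It is an illustration under the assumption that the density is the standard undirected density 2m / (n(n - 1)). It does not reproduce how GraphFeatureExtractor computes its output.

```python
import networkx as nx
import numpy as np

# Toy graph: six nodes in a ring plus one chord.
graph = nx.cycle_graph(6)
graph.add_edge(0, 3)

degrees = np.array([d for _, d in graph.degree()])
core = np.array(list(nx.core_number(graph).values()))

features = {
    "density": nx.density(graph),
    "transitivity": nx.transitivity(graph),
    "degree_mean": float(degrees.mean()),
    "degree_max": int(degrees.max()),
    "coreness_min": int(core.min()),
}
print(features)

# Consistency check on the values reported above: 202 nodes, degree_sum 1292.
n_nodes, degree_sum = 202, 1292
reported_density = degree_sum / (n_nodes * (n_nodes - 1))  # 2m / (n(n - 1))
reported_degree_mean = degree_sum / n_nodes
print(reported_density, reported_degree_mean)
```

The degree sum equals twice the number of edges, so the reported density and mean degree both follow from the node count and degree_sum alone.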

[38]:

def plot_graph(graph):
    # Lay out the nodes with a force-directed (spring) layout
    pos = nx.spring_layout(graph, scale=2)
    nx.draw_networkx_nodes(graph, pos, node_size=10, node_color="blue")
    nx.draw_networkx_edges(graph, pos, width=2, edge_color="grey")
    plt.axis("off")
    plt.show()

[39]:

plot_graph(knn_graph.to_undirected())

Conclusion and Further Steps

In summary, this notebook has detailed the application of Inference and Graph techniques to study the spatial distribution of TILs (Tumor Infiltrating Lymphocytes) in NSCLC samples. The approach demonstrated here can be extended to a larger set of WSIs for a comprehensive analysis.

Modeling and Statistical Testing

Moving beyond the scope of this notebook, the extracted graph features can be used to train predictive models or to perform hypothesis testing. For instance, a Wilcoxon signed-rank test on paired pre- and post-ICI samples from the same patients, or a t-test or Mann-Whitney U test on unpaired groups, can highlight significant changes associated with the treatment, providing valuable insights into the mechanisms of acquired resistance.
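As a sketch of what such testing might look like, the example below runs a paired and an unpaired test with SciPy on one graph feature. The numbers are randomly generated placeholders, not study data, and the variable names are illustrative.

```python
import numpy as np
from scipy.stats import mannwhitneyu, wilcoxon

rng = np.random.default_rng(42)

# Synthetic placeholder values for one feature measured in 12 samples.
# These are NOT results from the study.
pre_treatment = rng.normal(loc=90, scale=20, size=12)
post_treatment = pre_treatment - rng.normal(loc=30, scale=10, size=12)

# Paired test: the same patients measured before and after treatment.
paired = wilcoxon(pre_treatment, post_treatment)

# Unpaired test: two independent groups of samples.
unpaired = mannwhitneyu(pre_treatment, post_treatment, alternative="two-sided")

print(f"Wilcoxon signed-rank p = {paired.pvalue:.4f}")
print(f"Mann-Whitney U p = {unpaired.pvalue:.4f}")
```

The paired test is the appropriate choice when each post-treatment sample has a matching pre-treatment sample. When many features are tested at once, a multiple-comparison correction would also be needed.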

Extending Analysis with Advanced Graph Techniques

In addition to the foundational methods demonstrated, we can significantly enhance our analysis by incorporating advanced methods from the PathML Graph API as described in the “construct_graphs” notebook. These advanced techniques include:

Utilizing HoVer-Net for precise cell detection in specific Regions of Interest (ROI).

Employing boundary detection for intricate tissue identification.

Featurizing detected cells and tissues using ResNet models.

Constructing cell and tissue graphs with k-Nearest Neighbor (k-NN) and Region-Adjacency Graph (RAG) methods.

References

Pocock, J., Graham, S., Vu, Q. D., Jahanifar, M., Deshpande, S., Hadjigeorghiou, G., Shephard, A., Bashir, R. M. S., Bilal, M., Lu, W., Epstein, D., Minhas, F., Rajpoot, N. M., & Raza, S. E. A. (2022). TIAToolbox as an end-to-end library for advanced tissue image analytics. Communications Medicine, 2(1), 120. https://www.nature.com/articles/s43856-022-00186-5. DOI: 10.1038/s43856-022-00186-5.

Graham, S., Vu, Q. D., Raza, S. E. A., Azam, A., Tsang, Y. W., Kwak, J. T., & Rajpoot, N. (2019). HoVer-Net: Simultaneous segmentation and classification of nuclei in multi-tissue histology images. Medical Image Analysis, 58, 101563. https://www.sciencedirect.com/science/article/abs/pii/S1361841519301045.